Making decisions is hard. When you choose a restaurant, do you fall back on favorites or try something new? With the regular spots you know what you’re getting, so you’re lowering your chance of regret. But you could be missing out on something better. On the other hand, exploring a new restaurant increases your risk of suffering through sad and soggy scallion pancakes. We call the former behavior “exploitation” and the latter “exploration” -- essentially, exploiting the information you have versus exploring for new data. Exploitation and exploration have to be balanced for you to have a decent shot at sustainable, successful decision-making.

In online advertising, we may face the problem of choosing between different bidding models or strategies for an ad campaign. How should one balance exploitation and exploration to achieve the best performance?

We recently published a paper on how to address this problem with our Automatic Model Selector (AMS). It’s a system for scalable online selection of bidding strategies based on live performance metrics. Yes, a human can set up multiple bidding strategies -- but AMS can choose the right one at the right time to maximize performance. It automatically balances exploration and exploitation.

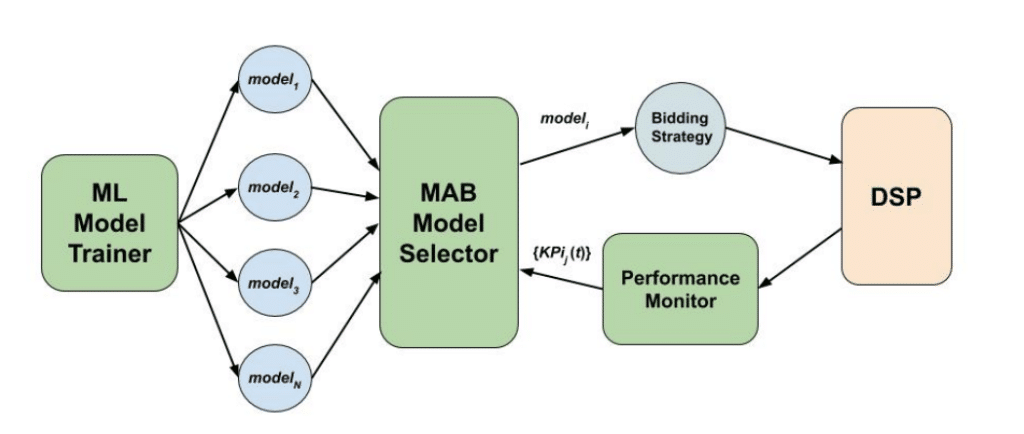

The system employs Multi-Armed Bandits (MAB) to continuously run and evaluate multiple models against live traffic, allocating the most traffic to the best-performing model while decreasing traffic to those with poorer performance. It explores by giving non-zero traffic to every model so that each one can be evaluated, and exploits by directing most traffic to the model that performs best. The extent of exploitation increases over time as the system gains more confidence in which model performs best. Figure 1 gives an overview of the components of the AMS system.

Figure 1. Components of the AMS system. The ML Model Trainer provides trained ML models as the arms available to the MAB algorithm, which is run by the MAB Model Selector. The selected model powers a bidding algorithm for a live RTB campaign running on a DSP. The campaign’s performance KPIs are tracked by the Performance Monitor, and the selection probabilities for the arms are updated based on them. Models are swapped every 15 minutes and performance KPIs are updated once a day.
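To make the explore-exploit loop concrete, here is a minimal sketch of how selection probabilities could be computed and used. This post doesn't specify which bandit algorithm AMS runs, so the sketch assumes a Thompson-sampling-style approach with a Beta posterior over each model's CTR. The function names, the `floor` parameter, and the click counts are all hypothetical and chosen only for illustration.

```python
import random


def selection_probabilities(clicks, impressions, n_draws=5000, floor=0.05, seed=0):
    """Estimate, for each arm (model), the probability that it has the best CTR.

    Assumption: CTR is the KPI, and each arm gets a Beta(1 + clicks,
    1 + non-clicks) posterior. `floor` (hypothetical) is the share of traffic
    spread evenly across arms so that none ever drops to zero.
    """
    rng = random.Random(seed)
    n_arms = len(clicks)
    wins = [0] * n_arms
    for _ in range(n_draws):
        # Draw one plausible CTR per arm from its posterior.
        draws = [
            rng.betavariate(1 + c, 1 + n - c)
            for c, n in zip(clicks, impressions)
        ]
        # Credit the arm whose sampled CTR is highest in this draw.
        wins[draws.index(max(draws))] += 1
    best_prob = [w / n_draws for w in wins]
    # Mix with a uniform distribution: exploration never stops entirely.
    return [(1 - floor) * p + floor / n_arms for p in best_prob]


def pick_model(probs, rng):
    """Sample the index of the model to serve for the next time slot."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


# Daily update: recompute probabilities from accumulated (made-up) KPI counts.
early = selection_probabilities(clicks=[5, 8, 6], impressions=[1000] * 3)
later = selection_probabilities(clicks=[500, 800, 600], impressions=[100000] * 3)

# Every 15 minutes: sample which model powers the bidder for the next slot.
slot_rng = random.Random(42)
todays_models = [pick_model(later, slot_rng) for _ in range(96)]
```

With little data, the probabilities stay fairly spread out, so all three models get meaningful traffic. With 100 times more data at the same CTRs, almost all of the probability mass moves to the second model, while the floor keeps the other two from being starved.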

Compared to the traditional model evaluation process that depended heavily on humans, AMS has a few advantages:

- AMS regularly and automatically evaluates model performance on online data using your media metrics (e.g., CTR and CPC). This avoids relying on stale data, and it avoids the mismatch between media metrics and machine learning metrics.

- AMS is flexible. It performs model selection for each campaign individually instead of choosing one model and applying it across all campaigns. Simpler models may perform better for small campaigns, while complex models may be needed for larger campaigns. AMS can find the model that works best for each campaign depending on the specific advertiser or market situation.

- AMS is scalable. It saves time by running controlled experiments on its own, which frees people to focus on high-level strategy. AMS evaluates model candidates on a case-by-case basis and systematically applies its findings to switch between models.

This system proved effective in initial online experiments. While AMS is not yet reflected in our live product, this research is fueling our thinking about innovations to come. If you’re interested in learning more about the details of AMS, or the online experiment results, you can find the information in our published paper: Online and Scalable Model Selection with Multi-Armed Bandits.